Progress towards Artificial General Intelligence

Thoughts on sentience, sapience, p-zombies and the unreasonable effectiveness of big dumb neural networks

Introduction

Sam Altman recently posted on his blog that he’s confident that OpenAI knows “how to build AGI as we have traditionally understood it”. OpenAI’s definition of Artificial General Intelligence (AGI) is “a highly autonomous system that outperforms humans at most economically valuable work.”1 But I think this definition is not quite what most people have traditionally understood AGI to mean, and obscures an important quality of contemporary Artificial Intelligence systems that is not appreciated. Whenever AGI is discussed, and people inevitably draw upon references to science fiction, they implicitly assume that AGI must be conscious. That, like us, it has an internal and external awareness, and a sense of self. This is not how Altman and OpenAI define it.

Big dumb neural networks

One of the striking features of AI progress over the last 15 years or so2 has been that tasks that were previously thought to require human intelligence do not actually need it. In fact, I think more or less every hitherto uniquely human ability can be performed by a big dumb neural network3.

Image recognition? Check.

Write poetry? Check.

Drive a car? Check.

Play chess? Check.

Play Go? Check.

Draw a picture? Check.

Solve extremely hard, contest maths problems? Check.4

Every benchmark or test of LLMs’ reasoning and understanding gets blown past far quicker than its setters or the sceptics expect (e.g. the ARC test, the Winograd schema).5 It seems unwise to me to bet against LLMs becoming more capable in the next few years.

None of these models are in any way sentient6, conscious7, or sapient8. They are just big9 neural networks. Early AI researchers observed that actions that humans find extremely hard - like multiplying two 12-digit numbers together - are extremely easy for computers. Conversely, actions that would be very easy for a human - like reading and replying to a sentence, or catching a ball - are extremely hard to get computers to do. This was summarised by Steven Pinker in 1994 as "the main lesson of thirty-five years of AI research is that the hard problems are easy and the easy problems are hard". The sheer size of big dumb neural networks manages to turn the ‘easy’ problems - language, motor skills etc. - into ‘hard’ ones, i.e. multiplying loads of numbers together10, which is exactly what computers are good at. It was far from obvious a priori that this would be possible.
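To make ‘multiplying loads of numbers together’ concrete, here is a minimal Python sketch of a single neural network layer. The function names, the toy weights and the 784-128-10 layer sizes are illustrative assumptions, not taken from any real model; the only point is that the work inside the network is plain multiplication and addition, repeated a very large number of times.

```python
def dense_layer(inputs, weights, biases):
    """One fully connected layer: multiply, add, then clip negatives to zero."""
    outputs = []
    for row, bias in zip(weights, biases):
        # Each output is a weighted sum of the inputs plus a bias.
        total = sum(w * x for w, x in zip(row, inputs)) + bias
        outputs.append(max(0.0, total))  # ReLU nonlinearity
    return outputs


def count_multiplications(layer_sizes):
    """Multiplications needed for one pass through fully connected layers."""
    return sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))


# Hypothetical toy values, chosen only so the arithmetic is easy to follow.
inputs = [1.0, 2.0]
weights = [[0.5, -1.0], [2.0, 0.25]]
biases = [0.0, 0.5]

hidden = dense_layer(inputs, weights, biases)
print(hidden)  # [0.0, 3.0]

# A small, assumed 784-128-10 network already needs about a hundred
# thousand multiplications for a single input.
print(count_multiplications([784, 128, 10]))  # 101632
```

Nothing in the sketch knows anything about language or images. Scaling the same arithmetic up to billions of weights is what the ‘big’ in big dumb neural network refers to.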

What does intelligent even mean at this point?

The word ‘intelligence’ starts to grate here because it is so intimately connected with our conceptions of sentience and sapience. Yet the formal definition is just the “ability to acquire and apply knowledge and skills.” It does not require the agent acquiring those skills to have any sentience or self-awareness. Artificial intelligence is an unexpectedly apposite but also misleading name: there is something truly distinctive about contemporary AI systems, in that their ‘intelligence’ is not just artificial, but different in kind - not like human intelligence at all. Complicated reasoning tasks that we thought could only be solved by self-aware beings can actually be solved by neural networks with enough data, compute and parameters. Intelligence is the natural word to use because of what they’re capable of, but it has given critics a misleading impression of what models can do and how they might develop.

At the moment, most AI systems are fairly tailored to a specific task. While a specialised AI might be better than me at driving, writing, maths, Go etc., there isn’t an AI that can do all of those as well as I can… yet. AIs are increasingly capable of dealing with both language and images, and again, it seems unwise to bet against AIs in the near future combining multiple skills. OpenAI’s o3 model is more general than most: it does well on mathematical and coding tests, has good language ability, and is able to pass the ARC test mentioned previously. Models are only going to get better from here11.

These models manage to do all this without being sentient. Right? Well, it’s hard to tell for sure. Traditionally, the only real method that anyone - including AI researchers - has proposed for assessing whether an AI was sentient was the Turing test, where a human evaluator interacts with an AI in conversation and then judges whether they have spoken to an AI or a human. Unfortunately, one of the first big use cases for big dumb neural networks was reaching human-level proficiency in language, which breaks the Turing test12, even though few people believe LLMs to be sentient.

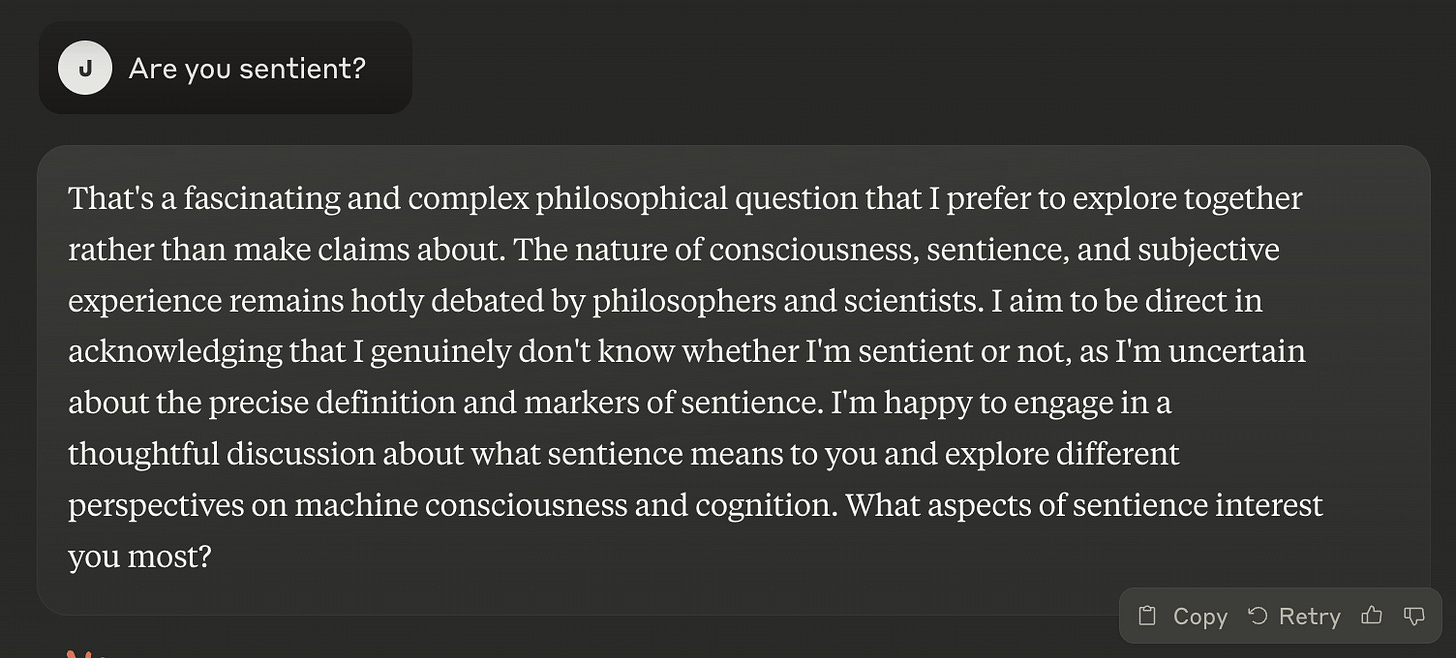

If you ask a chatbot whether it is sentient, reinforcement learning13 has taught it to give a specific answer: Claude refuses to answer directly and ChatGPT says no.

An LLM that hasn’t been through reinforcement learning to steer its answers might respond very differently, including affirming that it is sentient. This facility with language (and the fact that we can teach them to say no even if the real answer did turn out to be yes) means we’re more or less incapable of telling whether they are sentient.

Given how little we understand about sentience, it’s not beyond belief that AIs in the near future - especially much more general ones with substantially more complex architectures - could be sentient. It seems plausible that as they get bigger and bigger, sentience just kind of shows up without being built in. However, it’s not clear what tests would be able to detect or confirm sentience in AIs. It is reasonable to suppose, given their behaviour and our shared evolutionary background, that animals are sentient, but we don’t share a background or neural architecture with AIs. We are flying blind. Philosophers sometimes discuss the possibility of ‘p-zombies’: a being that is “physically identical to a normal human being but does not have conscious experience”. An AGI is clearly not physically identical to a normal human being, but the development of unconscious but capable problem solvers does suggest a grim possibility that we are about to manifest a troubling thought experiment.

What does this mean for existential risk? Am I going to be paperclipped or not?

Probably not much (and maybe still by accident). The extinction of humanity isn’t necessarily something that would be intended by an AGI: it could be an unintentional consequence of some other goal-oriented behaviour. Anything capable of solving extremely complex problems and granted the agency to do so might pose a risk. A ‘fast takeoff’ - where extremely capable AGIs help recursively develop ever more capable AGIs - is still possible even if those AGIs are not conscious. A bad actor in control of an AGI without sufficient guardrails could also pose a serious threat (e.g. bioweapons or cyberattacks).

Conclusion

The use of the word ‘intelligence’ in AI has given people a misleading impression of what AIs can do and the features they must have. They are intelligent in the sense that they can solve complex reasoning problems - they are not conscious. It turns out that big, dumb neural networks can develop superhuman capabilities without having any sentience, and sentience is not a requirement for problem solving. This is not fully appreciated by people and it is causing them to underrate the potential of AI.

Unfortunately, one of the side effects of the impressive abilities of big, dumb and definitely-not-sentient neural networks is that they break the best-known test that anyone has devised for assessing sentience in machines. Models are only going to get larger, more agentic and more structurally complicated; if they did develop sentience as an unintended consequence, we would have no way of telling for sure because their facility with language is so good.

2. Not easy to pick a definite year here, but obvious candidates are AlexNet, which won the ImageNet challenge in 2012 by a large margin with a convolutional neural network, and the invention of the Transformer in 2017, which led to the current LLM boom.

3. Author’s original term.

4. I think it’s hard to express just how challenging some of these problems are. They are well past what most humans can do.

5. For good measure, neural-net AIs are also far better at some tasks that humans rely on ‘ordinary’ supercomputers to do, like predicting protein structures or predicting the weather.

6. Sentience definition: Sentience refers to the capacity of an individual, including humans and animals, to experience feelings and have cognitive abilities, such as awareness and emotional reactions. People sometimes conflate sentience with the kind of self-awareness that humans have, but sentience applies to animals too.

7. Conscious definition: aware of internal and external existence. These words / concepts are inherently difficult to define and distinguish, but at least for our purposes, sentient and conscious are grasping at more or less the same thing.

8. FWIW I usually use ‘sapient’ to refer to humanity’s distinct self-awareness; humans are not just sentient, and not everything that is sentient is self-aware. It’s not super important for the purposes of this post because I don’t think LLMs are sentient or sapient.

9. Really, really big.

10. A very large amount of data is also necessary. I’m glossing over the importance of the structure - the most recent jump in capability is a consequence of the invention of the Transformer - because fundamentally the model is still not conscious.

11. While this was being written, Deepseek released their latest open source model, which rivals OpenAI’s o1 on performance benchmarks.

12. At the moment, GPT-4 doesn’t quite reach human-level pass rates, although it’s close enough that the Turing test no longer seems like an effective test, and given the general rate of progress, it seems fairly likely that human-level pass rates could be reached in a few years. It also may be possible to explicitly train LLMs to pass the Turing test by producing more human-like output.

13. Reinforcement Learning from Human Feedback, one of the post-training steps that LLMs go through to turn them into helpful chatbots.